A significant security vulnerability has been discovered in the free version of the ChatGPT app for macOS. The flaw has alarmed cybersecurity experts and users alike, and it highlights the risks that accompany the rapid spread of AI-powered applications. As ChatGPT grows in popularity, vulnerabilities like this one show why AI software needs stringent security measures. Here’s a detailed look at the flaw, its potential impact, and the steps being taken to address it.

The Discovery of the Security Hole

How the Vulnerability Was Found

The security hole in the ChatGPT macOS app was discovered by cybersecurity researchers conducting routine checks on popular AI applications. During their analysis, they found that the app had an unprotected API endpoint, which could be exploited by malicious actors to gain unauthorized access to user data.
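To illustrate what protecting an endpoint involves, here is a minimal Python sketch of a server-side check that rejects any request lacking a valid bearer token. It is not the ChatGPT app's actual code: the names `VALID_TOKENS`, `is_authorized`, and `handle_request` are hypothetical, and a real service would likely rely on a database or identity provider rather than an in-memory set.

```python
import hmac
import secrets

# Hypothetical token store for illustration only; one random token is
# issued at startup. A real service would look tokens up elsewhere.
VALID_TOKENS = {secrets.token_urlsafe(32)}


def is_authorized(headers: dict) -> bool:
    """Return True only if the request carries a known bearer token."""
    auth = headers.get("Authorization", "")
    scheme, _, token = auth.partition(" ")
    if scheme != "Bearer" or not token:
        return False
    # compare_digest takes the same time whether or not the tokens match,
    # so an attacker cannot learn a token byte by byte from timing.
    return any(
        hmac.compare_digest(token.encode(), valid.encode())
        for valid in VALID_TOKENS
    )


def handle_request(headers: dict) -> tuple:
    """Hypothetical endpoint handler: refuse unauthenticated callers."""
    if not is_authorized(headers):
        return 401, "unauthorized"
    return 200, "conversation data"
```

An unprotected endpoint is, in effect, one that skips the `is_authorized` step and returns data to whoever asks.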

Nature of the Flaw

The vulnerability allowed attackers to intercept communications between the app and its servers. Intercepted traffic could expose sensitive user information, including personal conversations, stored data, and even login credentials. The flaw was particularly worrying because it could be exploited remotely, which made every user of the app a potential target.

Potential Impact on Users

Data Privacy Concerns

The most immediate concern for users is data privacy. Unauthorized parties could access personal conversations and other data exchanged through the ChatGPT app. For users who rely on ChatGPT for sensitive communications, the repercussions could be severe.

Security Risks

In addition to privacy issues, the vulnerability posed serious security risks. If exploited, it could allow attackers to inject malicious code into the app and compromise the entire system. Attackers could then use that access to launch broader attacks on the user’s device, such as installing malware or ransomware.

Response from the Developers

Immediate Actions Taken

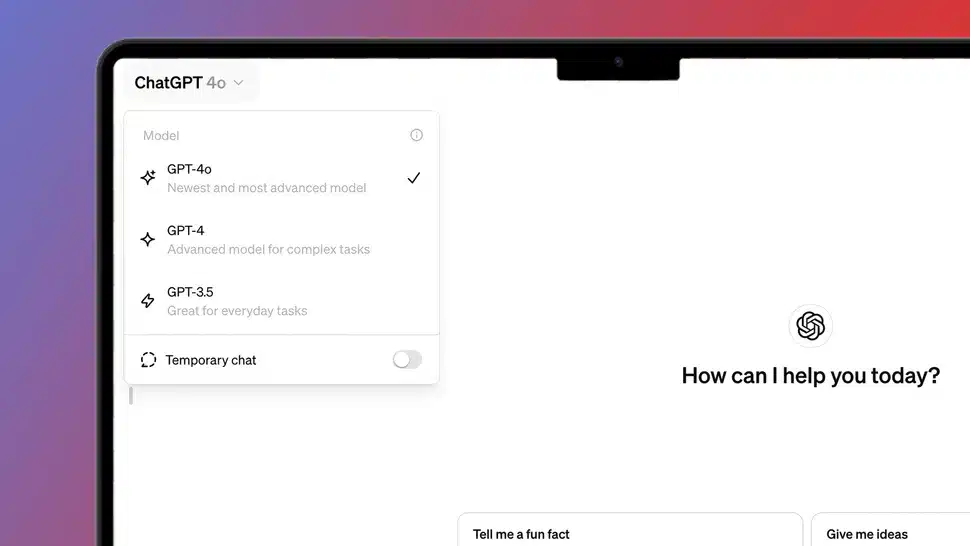

Upon discovery of the vulnerability, the developers of ChatGPT were quick to respond. They issued an emergency patch to close the unprotected API endpoint and urged all users to update their apps immediately. The patch aimed to mitigate the risk by securing the communications channel and ensuring that data exchanges were encrypted.
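As a rough illustration of what securing a communications channel can look like on the client side, the following Python sketch builds a TLS configuration that verifies the server's certificate and hostname and refuses protocol versions older than TLS 1.2. The function name `make_strict_tls_context` is hypothetical, and the patch's actual implementation is not public in this article, so this is only an assumption about the general technique.

```python
import ssl


def make_strict_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context that refuses unverified servers."""
    # create_default_context() enables certificate verification and
    # hostname checking against the system's trusted CA store.
    ctx = ssl.create_default_context()
    # Reject legacy protocol versions (an assumed policy for this sketch).
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


ctx = make_strict_tls_context()
```

A context like this can be passed to standard-library clients, for example `urllib.request.urlopen(url, context=ctx)`, so that a connection to a server with an invalid certificate fails instead of silently proceeding.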

Long-Term Measures

Beyond the immediate patch, the developers have committed to a thorough review of their security protocols. This includes an audit of the app’s codebase to identify and rectify any other potential vulnerabilities. Additionally, they have announced plans to implement more robust encryption standards and improve overall security features in future updates.

Best Practices for Users

Keeping Software Updated

One of the simplest yet most effective ways for users to protect themselves is to keep their software updated. Ensuring that the latest security patches and updates are installed can prevent many vulnerabilities from being exploited.

Using Strong, Unique Passwords

Users should also use a strong, unique password for each account. If one service is breached, a unique password keeps the damage confined to that account and prevents attackers from reusing the stolen credentials elsewhere.
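For readers who want to generate such passwords themselves, here is a minimal Python sketch using the standard library's `secrets` module, which draws from a cryptographically secure random source. The function name `generate_password`, the default length of 20, and the minimum of 12 characters are illustrative assumptions, not an official recommendation; a password manager does the same job with less effort.

```python
import secrets
import string


def generate_password(length: int = 20) -> str:
    """Return a random password drawn from letters, digits, and punctuation."""
    if length < 12:
        # Assumed lower bound for this sketch; short passwords are easy to guess.
        raise ValueError("use at least 12 characters")
    alphabet = string.ascii_letters + string.digits + string.punctuation
    # secrets.choice uses the operating system's secure random generator,
    # unlike random.choice, which is predictable.
    return "".join(secrets.choice(alphabet) for _ in range(length))
```

Calling `generate_password()` once per account yields a different password each time, which is what makes each one unique.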

Regularly Reviewing App Permissions

Regularly reviewing and managing app permissions can help users maintain better control over their data. Revoking unnecessary permissions can minimize the risk of data exposure.

The Broader Implications for AI Applications

The Need for Rigorous Security Testing

The discovery of this flaw in ChatGPT’s macOS app highlights the need for rigorous security testing in AI applications. As these apps become more integrated into everyday life, they handle more sensitive data, and the cost of a vulnerability grows with them. Developers must prioritize security to protect users from evolving threats.

Building Trust in AI Technology

For AI technology to keep growing and gaining acceptance, developers must build and maintain user trust. That means putting robust security measures in place and addressing vulnerabilities promptly. Users need to feel confident that their data is safe when using AI-powered applications.

Conclusion

The security flaw discovered in the free version of ChatGPT’s macOS app is a stark reminder of how much cybersecurity matters. The developers’ swift response and commitment to improving security are commendable, but the incident also shows the need for ongoing vigilance and robust security practices. As AI applications continue to advance, keeping them secure will be essential to protecting users and fostering trust in these technologies.